Introduction

In our last article we saw how Litestack provides a high-performance caching solution for SQLite-based Ruby/Rails applications. Today we will look at the applications themselves. Not all Ruby/Rails applications are built equally, and in this post we will look at how the environment Litestack runs in can drastically affect performance.

YJIT

YJIT is the official just-in-time compiler for Ruby. It compiles frequently executed Ruby code into optimized native code behind the scenes. YJIT has to be compiled in when Ruby itself is built, and all that requires is a Rust compiler installed at build time. Then, when running your Ruby application, you can either pass the `--yjit` option:

ruby --yjit myrubyapp.rb

Or you can enable it from within your Ruby code:

RubyVM::YJIT.enable

It’s worth noting that Rails will enable YJIT by default in the coming releases.

Once enabled, YJIT optimizes your code automatically; you don’t have to do anything else to benefit from it.
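To confirm that YJIT is actually active in a given process, a small check like the one below can help. It is only a sketch: `ensure_yjit` is a hypothetical helper name, and it assumes `RubyVM::YJIT.enable` is available only on Ruby 3.3 and later, so on older versions it merely reports the current state.

```ruby
# Hypothetical helper: tries to turn YJIT on and reports the outcome.
# Returns :unavailable, :enabled, or :disabled.
def ensure_yjit
  # RubyVM::YJIT is only defined when Ruby was built with YJIT support.
  return :unavailable unless defined?(RubyVM::YJIT)

  # Runtime enabling needs RubyVM::YJIT.enable (Ruby 3.3+);
  # older versions have to be started with --yjit instead.
  if !RubyVM::YJIT.enabled? && RubyVM::YJIT.respond_to?(:enable)
    RubyVM::YJIT.enable
  end

  RubyVM::YJIT.enabled? ? :enabled : :disabled
end

puts "YJIT status: #{ensure_yjit}"
```

Calling this early during boot (for example from an initializer) makes it obvious in the logs whether the benchmarks further down were run with or without YJIT.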

Fiber Scheduler (and other concurrency primitives)

Ruby has multiple concurrency primitives to support concurrent operation. These include threads, fibers, ractors and the new M:N light threads. Here’s a very quick overview of these different options:

Threads

Threads in Ruby map to native OS threads, so they are sometimes referred to as heavyweight threads. Threads are preemptive: they can be interrupted at any point while running Ruby code, but usually not while running extension code. This means that threaded code must guard against race conditions and make sure that access to resources shared between threads is properly managed.

One big issue with threads in Ruby, though, is that they generally run under the GVL (Giant Virtual Machine Lock), which ensures that only one thread runs Ruby code at a time. This means that threads are (generally) not able to utilize multiple cores.

The main advantages of threads in Ruby though are:

- They automatically switch on IO, allowing more work to be done from a single process while blocked on IO

- They attempt to slice time fairly between different tasks, resulting in slightly higher average latencies but also better tail latencies and latency distribution in general

The disadvantages can be summed up as:

- Higher memory requirements per thread

- Higher context switching cost
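The trade-offs above can be seen in a small experiment. The sketch below (the helper name `run_blocking_tasks` is made up for illustration) starts a few threads that each block in `sleep`, which stands in for blocking IO, and then append to a shared array guarded by a `Mutex`. Because a blocked thread releases the GVL, the total time should be close to a single pause rather than the sum of all pauses.

```ruby
# Runs `count` threads that each block for `pause` seconds and then record
# their index in a shared array. Returns [sorted results, elapsed seconds].
def run_blocking_tasks(count: 4, pause: 0.1)
  results = []
  lock = Mutex.new
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)

  threads = Array.new(count) do |i|
    Thread.new do
      sleep(pause)                      # stands in for blocking IO
      lock.synchronize { results << i } # guard the shared array
    end
  end
  threads.each(&:join)

  elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  [results.sort, elapsed]
end

results, elapsed = run_blocking_tasks
puts "finished #{results.size} tasks in #{elapsed.round(2)}s"
```

If the loop body were pure computation instead of `sleep`, the threads would take turns under the GVL and the elapsed time would grow with the number of threads.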

Fibers and The Fiber Scheduler

Fibers are much more lightweight concurrency primitives. You can think of them as methods that can be paused and resumed, or as non-preemptive threads. Because nothing interrupts them, they need to cooperate in order to run concurrently.

A fiber runs in a single thread and is always tied to it. Thus it too cannot utilize more than one core.
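As a minimal illustration of the "method that can be paused and resumed" idea, the following sketch uses only the core `Fiber` class; the method name `pause_and_resume_demo` and the log messages are invented for the example.

```ruby
# Shows a fiber pausing itself with Fiber.yield and being resumed later.
# Returns [log of events, value of the last resume, fiber still alive?].
def pause_and_resume_demo
  log = []

  worker = Fiber.new do
    log << "fiber: step 1"
    Fiber.yield            # pause; control goes back to whoever called resume
    log << "fiber: step 2"
    :done                  # becomes the return value of the final resume
  end

  worker.resume            # runs the fiber until Fiber.yield
  log << "caller: runs while the fiber is paused"
  final = worker.resume    # continues right after Fiber.yield

  [log, final, worker.alive?]
end

log, final, alive = pause_and_resume_demo
puts log
puts "final value: #{final.inspect}, alive: #{alive}"
```

Nothing here switches automatically: the fiber only gives up control where it calls `Fiber.yield`, which is what "cooperative" means in practice.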

Since Ruby 3.0, we have a Fiber Scheduler interface that allows automatic scheduling of fibers and automatic switching on blocking IO, thus it becomes much like threads but without the ability to time slice when doing compute.

Fibers (with Fiber Scheduler) in Ruby have the following advantages

- They are lightweight and need less memory than threads

- They are much faster at switching than threads

- You don’t need to do the switching manually when using the Fiber Scheduler

- Far fewer chances for race conditions (except those at IO operation boundaries)

But they also come with the following disadvantages

- There is no default Fiber Scheduler implementation; you have to select and use one

- They don’t auto-switch on compute tasks, meaning they potentially finish faster but at the expense of less fair scheduling, resulting in potentially higher tail latencies

- Not all libraries take advantage of fibers (this is changing, especially with Rails)

Ractors

Ractors are basically threads that do not share a GVL. They use different methods of sharing data among them, which removes the need to lock the VM to a single thread. Thus they can scale to multiple cores. The main issue is that they are not compatible with a lot of Ruby code and libraries, and they need some time before they can be really useful in production applications.

M:N Threads

These are lightweight threads, built much like fibers, that can also context switch on time slices, not just on IO. Their context switching is also much faster than that of native threads. The current implementation relies on Ractors, however, and hence it is still not ready for production workloads. Once Ractors and M:N Threads become mainstream, they promise to make Ruby a very efficient platform for concurrent processing.

Litestack & the Fiber Scheduler

It is little known that Litestack attempts to detect and utilize the current concurrency environment your app is running in. It is able to detect the following environments:

Threaded

This includes single-threaded environments. When a threaded environment is detected, Litestack will:

- Schedule tasks using `Thread.new`

- Use mutexes to control access to resources

- Make sure to respawn any created threads after forking

- Switch on blocking operations using `sleep` or `Thread.pass`

Fiber Scheduler

Litestack will detect that a fiber scheduler is running and will then do the following:

- Schedule tasks using `Fiber.schedule`

- Limit the usage of mutexes to no-ops in safe cases

- Switch on blocking operations using `Fiber.scheduler.yield`

Polyphony

If the app is running inside the fiber-based Polyphony gem, Litestack will do the following:

- Schedule tasks using `spin`

- Limit the usage of mutexes to no-ops in safe cases

- Switch on blocking operations using `Fiber.current.schedule` & `Thread.current.switch_fiber`

As a result of these customizations, which are completely transparent to the application, Litestack tailors its operations to be as efficient as possible in the current environment.

As an application developer, all you need to do is pick a Fiber Scheduler based server (e.g. Falcon), and Litestack will detect the environment and optimize its operations accordingly.
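To make the detection idea concrete, here is a rough sketch of how a library could tell these environments apart. It is not Litestack's actual code: the method name `detect_concurrency_environment` and the returned symbols are made up for illustration, and the only assumptions are that Polyphony defines a top-level `Polyphony` constant once loaded and that `Fiber.scheduler` (Ruby 3.0+) returns `nil` when no scheduler is set for the current thread.

```ruby
# Hypothetical helper, for illustration only.
# Returns :polyphony, :fiber_scheduler, or :threaded.
def detect_concurrency_environment
  # Assumption: the Polyphony gem defines this constant when it is loaded.
  return :polyphony if defined?(Polyphony)

  # Fiber.scheduler returns the current thread's scheduler, or nil if none.
  return :fiber_scheduler if Fiber.respond_to?(:scheduler) && Fiber.scheduler

  # Fallback: plain threads (this also covers single-threaded programs).
  :threaded
end

puts "detected environment: #{detect_concurrency_environment}"
```

Run from a plain `ruby` process this reports `:threaded`; the other branches only trigger when the code is called from inside a server or block that has set up a scheduler.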

The Fiber Scheduler’s Impact

Of all the Litestack components, Litejob perhaps makes the heaviest use of concurrent background contexts. That makes it a prime candidate for comparing the performance profiles of different concurrency environments. We will compare a threaded environment with a Fiber Scheduler based one, the latter using `Async::Scheduler`.

The test is run using both the Litejob native driver and its Rails ActiveJob driver.

The test creates and processes 10,000 jobs that don’t do much. We measure the elapsed time and, from it, the throughput of the job processing engine in jobs per second (JPS).
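The measurement itself is simple to reproduce in spirit. The sketch below is not the benchmark used for this post and does not use Litejob at all: it pushes no-op jobs through a plain in-memory `Queue` with a few worker threads (the `measure_throughput` name and its parameters are invented), just to show how elapsed time is turned into jobs per second.

```ruby
# Processes `job_count` no-op jobs with `workers` threads and reports
# how many jobs per second were handled. Illustrative stand-in only.
def measure_throughput(job_count: 10_000, workers: 5)
  queue = Queue.new
  job_count.times { |i| queue << i }
  queue.close                      # pop returns nil once the queue is drained

  processed = 0
  lock = Mutex.new
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)

  threads = Array.new(workers) do
    Thread.new do
      while queue.pop              # each job is a no-op; we only count it
        lock.synchronize { processed += 1 }
      end
    end
  end
  threads.each(&:join)

  elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  { processed: processed, elapsed: elapsed, jobs_per_second: processed / elapsed }
end

stats = measure_throughput
puts "#{stats[:processed]} jobs at #{stats[:jobs_per_second].round} JPS"
```

Numbers from this toy harness are not comparable to the tables that follow, since a real job engine also persists, fetches, and deserializes every job.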

Litejob Native

| Litejob Native | Throughput (JPS) | Memory (MB) |
|---|---|---|
| Threads | 2,575 | 47.1 |
| Threads + YJIT | 2,919 | 49.6 |
| Fibers | 5,236 | 45.8 |
| Fibers + YJIT | 5,864 | 47.6 |

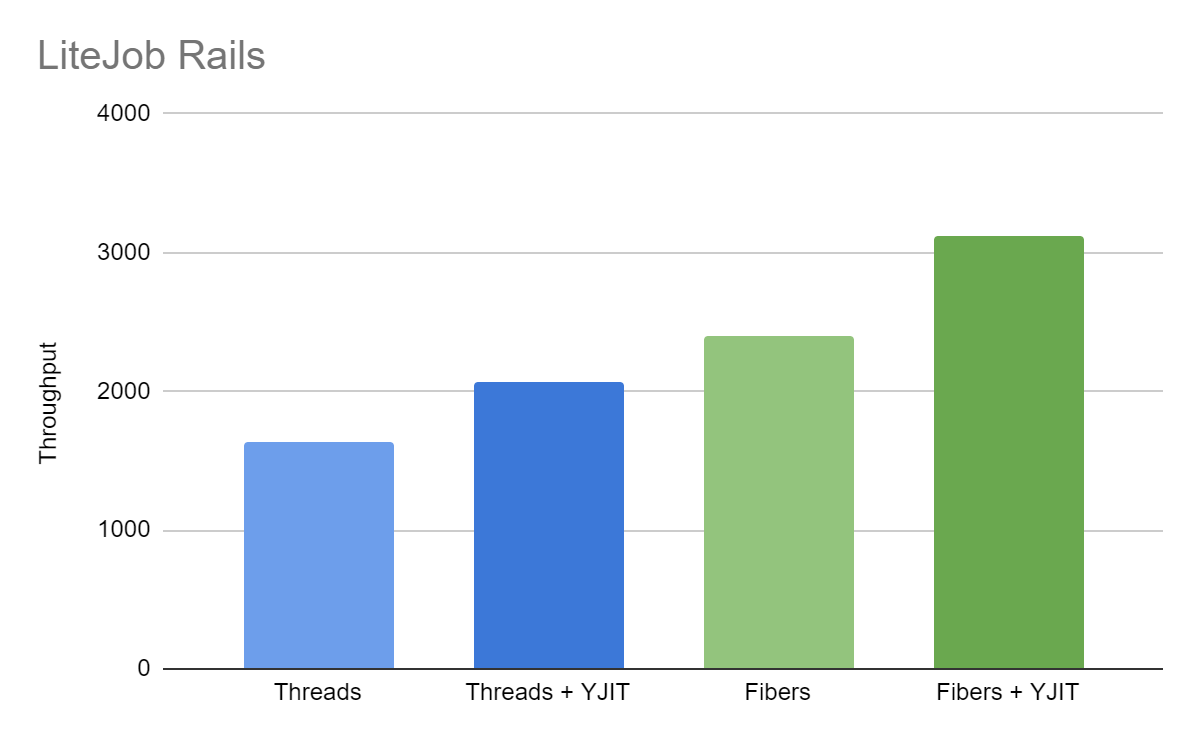

ActiveJob with the Litejob Adapter

| Litejob Rails | Throughput (JPS) | Memory (MB) |
|---|---|---|
| Threads | 1,628 | 55.9 |
| Threads + YJIT | 2,064 | 58.8 |
| Fibers | 2,406 | 52.6 |
| Fibers + YJIT | 3,124 | 55.5 |

Ruby and Rails applications have generally been built with threads as the basic concurrency primitive. One reason is that the de facto Rails server, Puma, is thread based. Judging by these results, though, fiber based servers look more promising on multiple fronts:

- Faster operation

- Less memory usage

- In the case of Rails, more YJIT impact (due to the presence of more Ruby code)

You can see that, when using ActiveJob, the YJIT + Fibers combo is much faster (3,124 vs. 1,628 JPS, roughly 1.9x) and uses slightly less memory (55.5 vs. 55.9 MB) than vanilla threads without YJIT. That’s a no-compromise win on almost all fronts.
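The ratios behind these claims can be recomputed directly from the two tables above. The snippet below only does arithmetic on the published numbers; the method names (`benchmark_results`, `speedup`, `yjit_gain_percent`) are made up for the example.

```ruby
# Throughput (jobs per second) and memory (MB), copied from the tables above.
def benchmark_results
  {
    native: {
      threads:      { jps: 2_575, mb: 47.1 },
      threads_yjit: { jps: 2_919, mb: 49.6 },
      fibers:       { jps: 5_236, mb: 45.8 },
      fibers_yjit:  { jps: 5_864, mb: 47.6 }
    },
    rails: {
      threads:      { jps: 1_628, mb: 55.9 },
      threads_yjit: { jps: 2_064, mb: 58.8 },
      fibers:       { jps: 2_406, mb: 52.6 },
      fibers_yjit:  { jps: 3_124, mb: 55.5 }
    }
  }
end

# Throughput ratio between two rows, e.g. 2.0 means twice the jobs per second.
def speedup(from, to)
  (to[:jps].to_f / from[:jps]).round(2)
end

# Percentage throughput gained by turning YJIT on for the same primitive.
def yjit_gain_percent(base, with_yjit)
  ((with_yjit[:jps].to_f / base[:jps] - 1) * 100).round(1)
end

benchmark_results.each do |driver, rows|
  puts "#{driver}: fibers+YJIT vs threads = #{speedup(rows[:threads], rows[:fibers_yjit])}x, " \
       "YJIT gain on threads = #{yjit_gain_percent(rows[:threads], rows[:threads_yjit])}%, " \
       "on fibers = #{yjit_gain_percent(rows[:fibers], rows[:fibers_yjit])}%"
end
```

With these inputs, YJIT adds roughly 12 to 13% to the native driver but roughly 27 to 30% to the ActiveJob driver, which is consistent with the point about Rails benefiting more from YJIT.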

Using SQLite with Ruby is all about simplicity and efficiency. By combining that with more efficient concurrency and computation via Fibers and YJIT, we complete that efficiency cycle at very little, if any, development cost.
